The New Enigma Camera — Optimizing Metadata for UAP Sightings

One of the exciting things about crowdsourcing UAP data is tapping the potential of the more than 6 billion smartphone users on the planet today. The human eye is an incredible sensing device: it can sense a single particle of light in a dark room, and it can identify objects seen for less than 15 milliseconds. Our eyes instinctively understand and interpret the world around us. Smartphones can do even more. They are amazing pocket computers that can do things the human eyeball cannot. They can store and share media, they connect viewing experiences across continents and countries, and, most importantly, they assign metadata to every media file captured: location, date and time. This metadata can be useful for scientific analysis of unidentified anomalous objects in the sky. Let’s dive in.

What is smartphone video metadata?

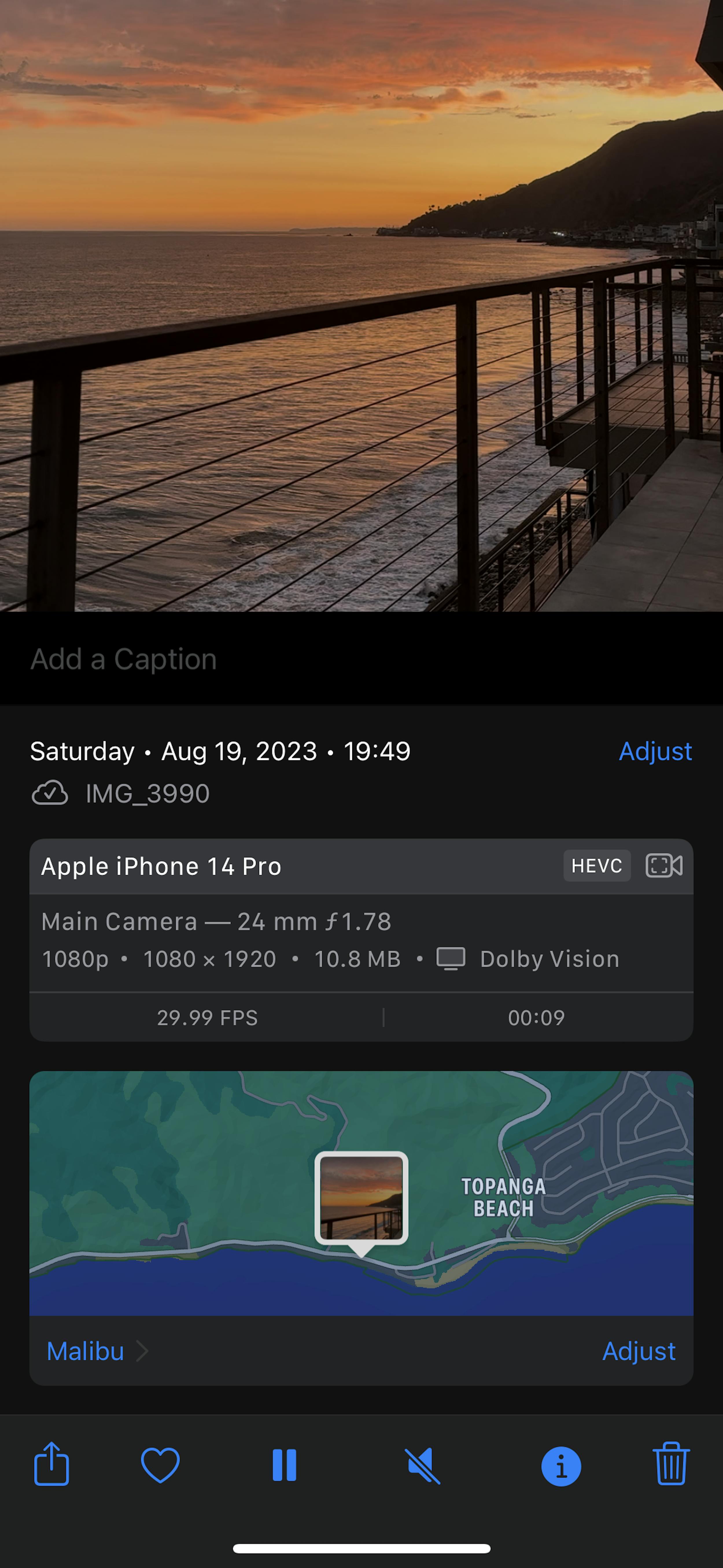

Smartphone video metadata consists of all the information about a digital video file: the author, the date created, the location when recording began, the camera’s intrinsic parameters and information about modifications. Each video file carries a single, file-wide set of this metadata. Many apps use smartphone video metadata to understand the content of the media shared. Using it to analyze UAP has its challenges. Standard metadata (time, date and GPS information) can be used to roughly organize and corroborate sightings. However, standard video metadata is recorded only once, at the start of filming. If a person is walking or driving while recording UAP, the location metadata becomes inaccurate after the first frame captured. This causes errors when trying to plot the path of a UAP or estimate its speed from a smartphone video whose creator was moving.

Standard smartphone camera metadata

Specialized Enigma camera metadata

The Enigma Camera is Optimized for Metadata

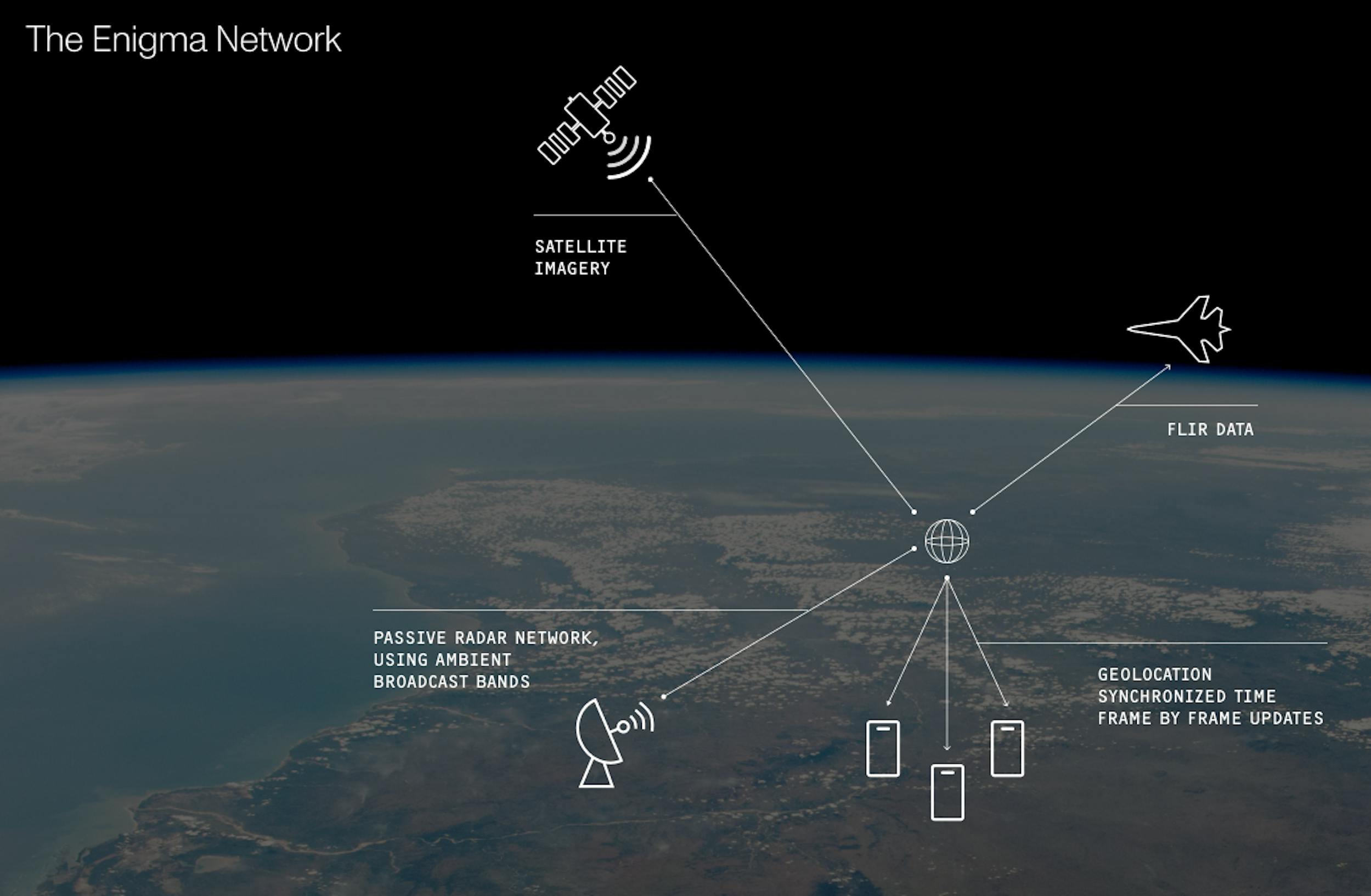

Because of these challenges, we’ve developed a native camera in our app to mitigate the potential for error. The Enigma Camera captures metadata frame by frame. This means that every frame of the video file carries its own location, date and time, and device orientation. This makes the data uniquely suited for the study of UAP, because it allows our team to have confidence in the speed, path and timing of recorded UAP. It also enables us to corroborate multiple separate submissions, from different users, of what may be the same recorded object.
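To make the difference concrete, here is a minimal Python sketch of what a per-frame record might look like and how it exposes the error that start-only metadata would hide. The `FrameMetadata` class, its field names and the `drift_from_first_frame` helper are hypothetical illustrations, not the Enigma Camera's actual file format. The distance calculation is the standard haversine formula on a spherical Earth.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, spherical approximation


@dataclass(frozen=True)
class FrameMetadata:
    """Hypothetical per-frame record; field names are illustrative only."""
    utc_seconds: float  # capture time of this frame
    lat_deg: float      # device latitude when the frame was captured
    lon_deg: float      # device longitude when the frame was captured
    heading_deg: float  # compass direction the camera is facing
    pitch_deg: float    # elevation of the camera axis above the horizon


def haversine_m(a: FrameMetadata, b: FrameMetadata) -> float:
    """Great-circle distance in meters between two frame locations."""
    phi1, phi2 = math.radians(a.lat_deg), math.radians(b.lat_deg)
    dphi = phi2 - phi1
    dlmb = math.radians(b.lon_deg - a.lon_deg)
    h = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def drift_from_first_frame(frames: list[FrameMetadata]) -> list[float]:
    """Distance of each frame's location from the first frame's location.

    With start-only metadata, this is the position error silently
    attached to every later frame.
    """
    first = frames[0]
    return [haversine_m(first, f) for f in frames]
```

If the observer moves 0.001 degrees of latitude during a clip, the last value returned by `drift_from_first_frame` is roughly 111 meters: the position error that file-wide metadata would carry for that frame.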

The Enigma Camera relies on stereo matching, a process by which 3D information is extracted from digital images, frame by frame and pixel by pixel rather than from the image as a whole. Intrinsic parameters differ from camera to camera, but with the Enigma Camera we can recover the radial geometry of the actual camera lens. This allows our team to select a pixel on the screen and work out how that pixel relates, in space and time, to the lens itself. By comparing this information between submissions of potentially the same UAP from two different vantage points, we can understand the relative position of an object in the two submissions.
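As an illustration of how two vantage points constrain an object's position, the sketch below uses the textbook midpoint method: each observer contributes a sight line (a position plus a direction), and the estimate is the midpoint of the shortest segment joining the two lines. This is a generic technique shown under stated assumptions, not a description of Enigma's pipeline. The function names are hypothetical, and positions are assumed to be already converted to a shared local east-north-up frame in meters.

```python
import math


def direction_from_orientation(heading_deg: float, pitch_deg: float) -> tuple:
    """Unit vector (east, north, up) for a compass heading and an elevation angle."""
    h, p = math.radians(heading_deg), math.radians(pitch_deg)
    return (math.cos(p) * math.sin(h), math.cos(p) * math.cos(h), math.sin(p))


def _dot(u, v) -> float:
    return sum(a * b for a, b in zip(u, v))


def triangulate(p1, d1, p2, d2, eps: float = 1e-12):
    """Midpoint of the shortest segment between two sight lines.

    p1, p2: observer positions; d1, d2: viewing directions.
    Returns (estimated_point, gap), where gap is the miss distance
    between the two lines and works as a rough quality indicator.
    """
    w0 = tuple(a - b for a, b in zip(p1, p2))
    a, b, c = _dot(d1, d1), _dot(d1, d2), _dot(d2, d2)
    d, e = _dot(d1, w0), _dot(d2, w0)
    denom = a * c - b * b
    if denom <= eps * a * c:
        raise ValueError("sight lines are (nearly) parallel")
    t1 = (b * e - c * d) / denom  # distance parameter along line 1
    t2 = (a * e - b * d) / denom  # distance parameter along line 2
    if t1 < 0 or t2 < 0:
        raise ValueError("closest approach lies behind a camera")
    q1 = tuple(p + t1 * k for p, k in zip(p1, d1))
    q2 = tuple(p + t2 * k for p, k in zip(p2, d2))
    midpoint = tuple((x + y) / 2 for x, y in zip(q1, q2))
    return midpoint, math.dist(q1, q2)
```

For two observers 10 meters apart whose sight lines cross, for example `triangulate((0, 0, 0), (1, 0, 1), (10, 0, 0), (-1, 0, 1))`, the estimate is the crossing point and the gap is zero. With real data the lines rarely meet exactly, and a large gap suggests the two clips may not show the same object.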

Triangulating multiple viewpoints into one holistic UAP sighting report

Triangulating multiple users’ viewpoints

Our team has been studying how to coordinate capture among multiple cameras. To do so, the submissions need to agree on a single universal time. We are using Network Time Protocol (NTP) to synchronize clocks across devices. While a video is being recorded, a frame can get dropped here and there for myriad reasons, so frame timing cannot be inferred from the frame count alone. The synchronization that we do puts a stamp on every frame that says, "this is the agreed-upon, universal time."
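The core of NTP synchronization is a four-timestamp exchange, which can be sketched in a few lines of Python. The offset and delay formulas are the standard ones from the NTP specification. The function names and the idea of applying a single offset to a list of frame times are assumptions made for illustration, and a real client would typically filter many exchanges rather than trust one.

```python
def ntp_offset_and_delay(t0: float, t1: float, t2: float, t3: float):
    """Standard NTP clock offset and round-trip delay, in seconds.

    t0: request sent      (device clock)
    t1: request received  (server clock)
    t2: reply sent        (server clock)
    t3: reply received    (device clock)
    """
    offset = ((t1 - t0) + (t2 - t3)) / 2.0  # how far the device clock lags the server
    delay = (t3 - t0) - (t2 - t1)           # network round trip, excluding server time
    return offset, delay


def stamp_frames(device_times: list[float], offset: float) -> list[float]:
    """Convert per-frame device timestamps to the shared, server-aligned time.

    Each frame is stamped from its own capture time, so a dropped frame
    leaves a gap in the list instead of shifting every later timestamp.
    """
    return [t + offset for t in device_times]
```

Because each frame keeps its own timestamp, two clips recorded on different phones can be lined up frame by frame even when either recording skipped frames.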

Using NTP and stereo matching, we can more confidently understand UAP. When two separate individuals submit media of a similar sighting, the two viewpoints are usually discontinuous, and there's a gap in the timeline. Perhaps you start recording and stop two minutes later, and then someone down the block starts a different recording a few minutes after that. The Enigma Camera allows us to put these clips together into one continuous sighting of a UAP.
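One simple way to picture this stitching step is as interval grouping on the shared clock: clips whose start falls within some tolerance of the previous clip's end are treated as one sighting, and the uncovered stretches are recorded as gaps. The sketch below is a hypothetical illustration. The `Clip` type, the `build_timeline` function and the 10-minute `max_gap_s` default are all assumptions, and a real pipeline would also check location and viewing direction before linking clips.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    """Hypothetical submission summary, with times on the shared clock."""
    user: str
    start: float  # seconds
    end: float    # seconds


def build_timeline(clips: list[Clip], max_gap_s: float = 600.0) -> list[dict]:
    """Group clips into sightings and record the uncovered gaps in each."""
    sightings: list[dict] = []
    for clip in sorted(clips, key=lambda c: c.start):
        current = sightings[-1] if sightings else None
        if current is not None and clip.start - current["end"] <= max_gap_s:
            if clip.start > current["end"]:
                # Nobody was recording between the previous end and this start.
                current["gaps"].append((current["end"], clip.start))
            current["end"] = max(current["end"], clip.end)
            current["clips"].append(clip)
        else:
            sightings.append(
                {"start": clip.start, "end": clip.end, "clips": [clip], "gaps": []}
            )
    return sightings
```

For the scenario above, a two-minute clip followed three minutes later by a neighbor's clip becomes a single sighting with one recorded gap, while a clip submitted more than an hour later starts a new sighting.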

The team will be releasing the Enigma Camera in the coming days, and we are excited to share more about our metadata triangulation work in the next few months.